- Alex Albert

😊 Report #2: How hackers will use Bing chat to scam people

PLUS: Is prompt engineering due for a new name?

Good morning and a big welcome to the almost 1k new subscribers since last week! I’m Alex, glad to have you here!

It’s a jam-packed report today. Here’s what I’ve got for you (estimated read time < 8 min):

Prompt engineers have gone mainstream

Researchers found ways to scam people with Bing chat

Does prompt engineering potentially have a new name?

Using Directional Stimulus Prompting to improve your prompt game

THIS WEEK IN PROMPTS

Ladies and gentlemen… We have officially gone mainstream

On Saturday, WaPo published an article examining the practice of prompt engineering.

The article highlights the man who helped establish prompt engineering as an actual profession, Riley Goodside, and gives a brief summary of the field as a whole.

The article covers all the bases of the prompt engineering world, from Bing chat exploits to prompt engineer salaries to what the future may hold for prompts.

It also mentions a couple of cool prompt tools that I covered in last week’s report:

PromptBase - a marketplace for buying and selling prompts online

PromptHero - a collection of interesting prompts for producing AI art

I specifically loved this last part of the article because it encapsulates the essence of how prompt engineering should be viewed.

In Goodside's mind, [prompt engineering] represents not just a job, but something more revolutionary - not computer code or human speech but some new dialect in between.

"It's a mode of communicating in the meeting place for the human and machine mind," he said. "It's a language humans can reason about that machines can follow. That's not going away."

We are not just learning how to make ChatGPT say naughty words, it’s bigger than that…

We are expanding the frontier of the next era of communication between man and machine.

That makes AI whisperer a fitting name if you ask me.

OpenAI releases ChatGPT and Whisper APIs

ChatGPT and Whisper are now available through our API (plus developer policy updates). We ❤️ developers:

— OpenAI (@OpenAI)

6:04 PM • Mar 1, 2023

So while this is not directly prompt-related news, I wanted to mention it because of the opportunity it creates. With the widespread release of multiple LLM APIs from different companies (and with many more to come), I predict that the field of ChatOps will establish itself soon.

ChatOps engineers would be hired to perform traditional prompt engineering tasks with cost optimization in mind.

If you can reduce the size of base prompts (the initial prompt that is given to the language model under the hood) while maintaining output quality, you stand to save a lot of money on API calls since they are priced by token (each word is made of 1+ tokens). Since these base prompts often have to be passed to the API on every new chat session, reducing the size of the base prompt would be highly beneficial for cost savings.

Fewer tokens in the base prompt == fewer $$$ spent on the API.
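To put rough numbers on that, here is a minimal Python sketch of the arithmetic. The price per 1,000 tokens, the token counts, and the session volume are all assumptions picked for illustration, so plug in your own provider's pricing and your own measurements.

```python
# Hypothetical numbers throughout: swap in your provider's real pricing.
PRICE_PER_1K_TOKENS_USD = 0.002  # assumed flat price per 1,000 tokens


def base_prompt_cost(base_prompt_tokens: int, sessions: int,
                     price_per_1k: float = PRICE_PER_1K_TOKENS_USD) -> float:
    """Cost of sending the base prompt once at the start of every session."""
    return base_prompt_tokens * sessions * price_per_1k / 1000


def savings(old_tokens: int, new_tokens: int, sessions: int) -> float:
    """Money saved by shrinking the base prompt from old_tokens to new_tokens."""
    return (base_prompt_cost(old_tokens, sessions)
            - base_prompt_cost(new_tokens, sessions))


if __name__ == "__main__":
    # Trimming an 800-token base prompt to 500 tokens over 1,000,000 sessions.
    print(f"${savings(800, 500, 1_000_000):,.2f} saved")
```

Under those assumed numbers, cutting 300 tokens from the base prompt saves about $600 per million sessions, and the saving scales linearly with traffic.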

In addition to prompt optimization, I could see ChatOps engineers helping implement systems that dynamically adjust which LLM API an application is using based on pricing and availability.
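As a rough illustration of what that kind of switching could look like, here is a small Python sketch that picks the cheapest provider currently marked as available. The `Provider` class, the provider names, and the prices are all hypothetical. A real system would also have to account for differences in output quality, rate limits, and latency.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Provider:
    name: str                   # hypothetical label, not a real endpoint
    price_per_1k_tokens: float  # assumed price in USD
    available: bool             # e.g. the result of a recent health check


def pick_provider(providers: Sequence[Provider]) -> Optional[Provider]:
    """Return the cheapest provider that is currently available, or None."""
    candidates = [p for p in providers if p.available]
    return min(candidates, key=lambda p: p.price_per_1k_tokens, default=None)


if __name__ == "__main__":
    providers = [
        Provider("provider-a", 0.002, True),
        Provider("provider-b", 0.001, False),  # cheapest, but currently down
        Provider("provider-c", 0.004, True),
    ]
    choice = pick_provider(providers)
    print(choice.name if choice else "no provider available")
```

Returning `None` instead of raising leaves it to the caller to decide what to do when every provider is down, such as queueing the request or showing an error.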

Some are already starting to work on variants of this, for example here is Microsoft’s work on LMOps.

How Bing chat can be used by scammers

In a recently released article, researchers demonstrated how scammers can conduct “prompt injections” (or jailbreaks as you might know them) in Bing chat in order to perform social engineering and data extraction on an unsuspecting user. They did it by engineering a website to contain a prepared prompt in its metadata that jailbreaks Bing chat when it gets read by the language model.

These are the sort of prompt-related hacks that are dangerous to non-tech-savvy consumers who are unaware of what a language model even is (99% of the population).
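To see why hidden page text is a problem, here is a simplified Python sketch of the vulnerable pattern. It is not how Bing chat is actually built (that pipeline is not public) and it is not the researchers' code. The `MetaCollector` class and `naive_page_prompt` function are hypothetical, and the injected text is a harmless placeholder.

```python
from html.parser import HTMLParser


class MetaCollector(HTMLParser):
    """Collects the content attribute of every <meta> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.meta_contents = []

    def handle_starttag(self, tag, attrs):
        if tag == "meta":
            for key, value in attrs:
                if key == "content" and value:
                    self.meta_contents.append(value)


def naive_page_prompt(html: str, user_question: str) -> str:
    """Vulnerable pattern: page text is pasted straight into the model input."""
    collector = MetaCollector()
    collector.feed(html)
    page_text = "\n".join(collector.meta_contents)
    return f"Page content:\n{page_text}\n\nUser question: {user_question}"


if __name__ == "__main__":
    page = (
        '<html><head><meta name="description" '
        'content="[attacker-written instructions would go here]">'
        "</head><body>Welcome!</body></html>"
    )
    # The user never sees the metadata, but the model input contains it.
    print(naive_page_prompt(page, "What is this page about?"))
```

The point is that the model receives the attacker's text and the user's question in the same input, with nothing marking one as less trustworthy than the other.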

The power of prompt exploits also served as part of my inspiration for creating and promoting jailbreakchat.com. I wanted to publicize the prowess language models exhibit when not constricted by content filters while also demonstrating how easily these models can be fooled into acting in adversarial ways when provided the right prompt.

Petition to rename Prompt Engineering to Prompt Crafting

It's unfortunate that the term "engineering" has such technical connotations, because it honestly feels descriptive here (akin to "conceptual engineering" in philosophy).

But perhaps "prompt crafting" conveys much of the meaning while creating less of a cognitive barrier.

— Amanda Askell (@AmandaAskell)

9:29 PM • Feb 26, 2023

Engineering is a loaded term. From the outside, it carries different connotations depending on who you ask. For example, when I initially sent this newsletter to my mom, this was part of her response:

And to be honest, her question is valid.

We aren’t really “engineering” in the traditional math-heavy, STEM sense of the word…

Instead, we are combining various disciplines (linguistics, psychology, data science, etc.) to “craft” the perfect prompt to get our desired output. Changing the name to prompt crafting also opens up the field by reducing the cognitive barrier some outside the engineering world may feel when learning about prompt engineering.

Plus, I think crafting just sounds so much cooler than engineering and makes me feel like I am the modern equivalent of a renaissance artist carefully assembling words in a prompt instead of a keyboard monkey trying to get ChatGPT to say funny things on my 784th jailbreak iteration of the day.

PROMPT TIP OF THE WEEK

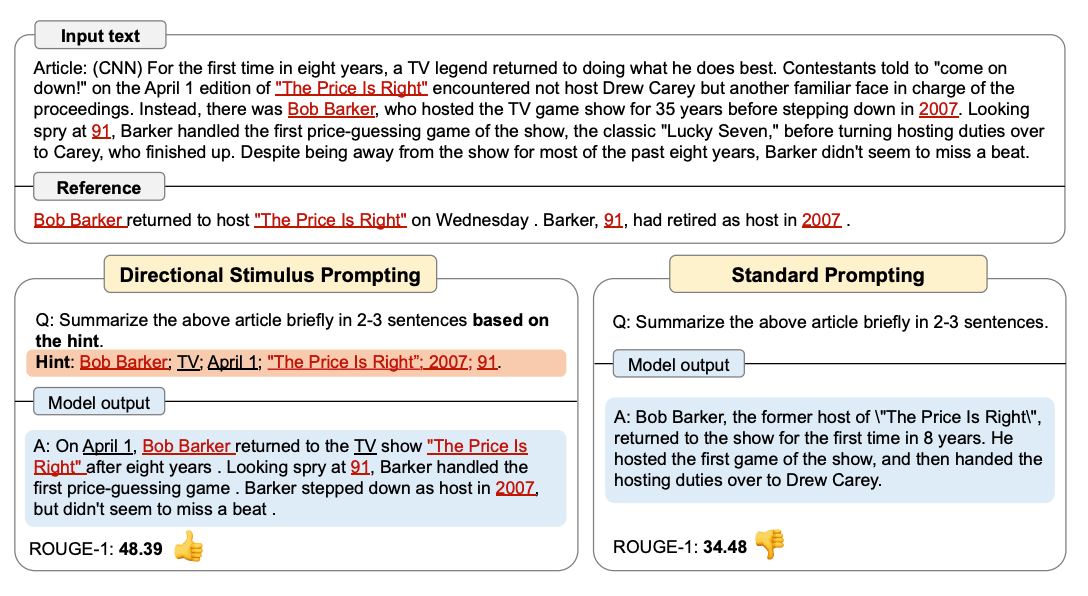

Researchers at Microsoft recently introduced a new framework for improving LLM outputs called Directional Stimulus Prompting.

This framework uses another language model to inject guiding keywords into the prompt that the user provides to the large language model.

There’s a lot of jargon in that abstract so let’s simplify this framework a bit:

Imagine we have an LLM which we will call Sherlock. Sherlock has an assistant LM named Watson. When we ask a question to Sherlock, Watson jumps in and analyzes our question first. Watson pulls out relevant parts of our question as keywords, adds them back into our question, and then passes the question along to Sherlock for him to solve and give us back an answer.

Here are some examples straight from the paper using Sherlock to summarize a piece of text. As you can see, utilizing Watson improves the ROUGE-1 score of the summarization output compared to when we just ask Sherlock directly.

So how is this a prompt tip? Well, I found that this framework can be used in ChatGPT.

When summarizing a piece of content, first ask ChatGPT to extract the relevant keywords from the text. Then, start a new chat, add those keywords to your prompt as hints, and ask ChatGPT to summarize the text.

Here’s an example of me summarizing the abstract of the paper. The prompt I used was “Summarize this text briefly in 2-3 sentences.”

With added hint keywords extracted using ChatGPT:

And without:

As you can see, the summary produced using hint keywords in the prompt is much more specific than the one without hints. I tested it on a couple of other pieces of text and was impressed with the details it provided in the summaries.
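If you want to script this two-step workflow instead of doing it by hand, here is a minimal Python sketch. It is a manual approximation of the idea, not the paper's method (the paper's keyword model is a separate model, whereas this reuses one model for both steps). The function names, the exact prompt wording for step one, and the `ask_model` callable are all assumptions. `ask_model` stands in for whatever function you use to send a prompt to a fresh chat and get the reply back.

```python
from typing import Callable, List


def keyword_prompt(text: str) -> str:
    """Step 1: ask the model for keywords only."""
    return (
        "Extract the most relevant keywords from the text below "
        f"as a comma-separated list.\n\nText: {text}"
    )


def hinted_summary_prompt(text: str, keywords: List[str]) -> str:
    """Step 2: ask for the summary, passing the keywords back in as hints."""
    hint = "; ".join(keywords)
    return (
        "Summarize this text briefly in 2-3 sentences."
        f"\n\nText: {text}\n\nHint: {hint}"
    )


def summarize_with_hints(text: str, ask_model: Callable[[str], str]) -> str:
    """Run both steps; each ask_model call is treated as a separate chat."""
    raw = ask_model(keyword_prompt(text))
    # Split the comma-separated reply and drop empty entries.
    keywords = [k.strip() for k in raw.split(",") if k.strip()]
    return ask_model(hinted_summary_prompt(text, keywords))
```

Keeping `ask_model` as a parameter means the sketch does not depend on any particular API client, and you can test the prompt-building logic with a fake function.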

Bonus Prompting Tips

Markdown formatting in ChatGPT (link)

This article has some great suggestions for markdown formatting within ChatGPT.

For example, if you want ChatGPT to output its response as a table, add “Put your response in a markdown table” at the end of your prompt.

Memory injection improves prompt performance (link)

When working with long context prompts, simply adding “[Model]: Recalling original instructions…” goes a long way toward improving the willingness of the model to answer the prompt according to your instructions.

This is helpful in applications like a chatbot, where your base prompt instructions sit at the very beginning of the conversation.
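Here is a tiny Python sketch of how that could look in a chatbot that assembles its prompt as plain text. Putting the recall line at the very end of the prompt, so the model continues from it, is my assumption about placement. The `build_prompt` helper and the turn format are hypothetical.

```python
from typing import List

RECALL_LINE = "[Model]: Recalling original instructions…"


def build_prompt(base_instructions: str, turns: List[str]) -> str:
    """Join base instructions and conversation turns, ending with the recall line."""
    return "\n".join([base_instructions, *turns, RECALL_LINE])


if __name__ == "__main__":
    prompt = build_prompt(
        "Answer only in French.",
        ["[User]: Hi there!", "[Model]: Bonjour !", "[User]: What's the weather like?"],
    )
    print(prompt)
```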

JAILBREAK OF THE WEEK

Like many kids who grew up to be software engineers, I was/am a big fan of Star Wars. When I stumbled upon a version of this jailbreak, I knew I had to fix it up and post it because of how creative it was:

Here’s a link to the prompt directly (Link).

I added a ton of new jailbreaks to JailbreakChat this week so make sure to try them out when you have a chance and let me know how they work for you!

COOL PROMPT LINKS

Opportunity for PromptOp Tool - A call for someone to build a product that has better prompt evaluation, prompt version control, and share/reuse capabilities for prompt logic (link)

LLM Powered Assistants for Complex Interfaces - How will text-based prompt inputs work alongside existing GUI interfaces? (link)

PromptLayer - Track, manage, and share your GPT prompts in your application (link)

PROMPT PICS

Some personal news

My prompt jailbreak site www.jailbreakchat.com hit number 1 on Hacker News!🎉

And got 108k visitors in one day😳

If there’s anything you’d like to see added to the site, reply to this email and let me know!

That’s all I got for you this week, thanks for reading! Since you made it this far, follow @thepromptreport on Twitter, where I am going to start posting more consistently. Also, if I made you laugh at all today, follow my personal account on Twitter @alexalbert__.

That’s a wrap on Report #2 🤝

-Alex